Hadoop Environment (hdenv) was a bash shell helper script for Hadoop that made switching between clusters and executing common commands easier.

http://code.google.com/p/hadoopenv/

The project was a set of utilities meant to make working with multiple remote Hadoop clusters from the command line easier. It relied primarily on features of later versions (3.1+) of the bash shell environment.

It allowed you to interact with a remote Hadoop cluster without ssh’ing to the primary name node and using its Hadoop client install. This was incredibly useful: it avoided having to manually transfer your jars from your desktop computer to a node in the cloud just to run a job.

Key Features:

- Maintains a list of clusters you connect to and lets you pick one from the list

- Remembers last cluster used

- Provides remote interaction with cluster - including HDFS with auto-completion (thanks to the bash completion feature and these settings for hdfs: http://blog.rapleaf.com/dev/2009/11/17/command-line-auto-completion-for-hadoop-dfs-commands/)

- Uses the standard hadoop client script provided in all Hadoop 0.20.1+ releases
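The remote-path auto-completion mentioned above can be sketched with bash's programmable completion. This is only an illustration under stated assumptions, not the hdenv or Rapleaf implementation: the function name `_hd_complete_path`, the wrapper command name `hd_ls`, and the `HADOOP_CMD` variable are hypothetical. It handles absolute paths without spaces only, and it takes the last column of each `hadoop fs -ls` line as the path.

```shell
# Hypothetical sketch of remote-path completion; names are illustrative only.
# HADOOP_CMD is assumed to point at a working hadoop client script.
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# Fill COMPREPLY with remote paths that start with the word being completed.
_hd_complete_path() {
  local cur="${COMP_WORDS[COMP_CWORD]}"
  local dir paths
  # List the parent directory of whatever has been typed so far.
  case "$cur" in
    */*) dir="${cur%/*}/" ;;
    *)   dir="/" ;;
  esac
  # Keep only file and directory rows; the path is the last column.
  paths="$("$HADOOP_CMD" fs -ls "$dir" 2>/dev/null | awk '/^[-d]/ { print $NF }')"
  COMPREPLY=( $(compgen -W "$paths" -- "$cur") )
}

# Register the completion for a hypothetical wrapper command named hd_ls.
complete -F _hd_complete_path hd_ls
```

Each completion attempt makes a round trip to the cluster, so a real implementation would likely want some caching.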

Presentation

See: Presentation Slides

Hadoop Environment

Bash $hell Helper Scripts for Hadoop

http://code.google.com/p/hadoopenv/

What Does it Do?

- Just a wrapper for the hadoop utility

- e.g. hadoop-0.20.1/bin/hadoop

- Requires a working hadoop script

- Remotely access clusters

- submit and manage jobs

- Access HDFS, auto-complete on remote paths

- Manages information about clusters

- Facilitates easy switching between clusters

Setup

- Download: http://code.google.com/p/hadoopenv/

- See README for install

- ~/.hdconf/cluster.csv lists clusters you know about

- Pick a cluster: $ . hdenv.sh cluster-name

- Source hdenv.sh in your bash profile and it will set your environment to the last cluster picked
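The cluster list and "last cluster picked" behaviour described above could work roughly as follows. This is a sketch under assumptions, not the actual hdenv.sh: the CSV column layout (name, namenode URI, job tracker address), the `last` file, and the `hd_list_clusters` / `hd_pick_cluster` function names and `HD_*` variables are all hypothetical.

```shell
# Hypothetical sketch; the real hdenv.sh and cluster.csv layout may differ.
# Assumed CSV columns: name,namenode-uri,jobtracker-host:port
HD_CONF_DIR="${HD_CONF_DIR:-$HOME/.hdconf}"

# Print the name of every cluster listed in the CSV file.
hd_list_clusters() {
  cut -d, -f1 "$HD_CONF_DIR/cluster.csv"
}

# Select a cluster by name, export its addresses, and remember the choice.
# With no argument, fall back to the last cluster picked.
hd_pick_cluster() {
  local name="$1" line
  if [ -z "$name" ] && [ -r "$HD_CONF_DIR/last" ]; then
    name="$(cat "$HD_CONF_DIR/last")"
  fi
  # Exact match on the first column; print only the first matching row.
  line="$(awk -F, -v n="$name" '$1 == n { print; exit }' "$HD_CONF_DIR/cluster.csv")"
  if [ -z "$line" ]; then
    echo "unknown cluster: $name" >&2
    return 1
  fi
  HD_CLUSTER="$(printf '%s\n' "$line" | cut -d, -f1)"
  HD_NAMENODE="$(printf '%s\n' "$line" | cut -d, -f2)"
  HD_JOBTRACKER="$(printf '%s\n' "$line" | cut -d, -f3)"
  export HD_CLUSTER HD_NAMENODE HD_JOBTRACKER
  echo "$HD_CLUSTER" > "$HD_CONF_DIR/last"
}
```

Because the functions change the caller's environment, a script like this has to be sourced (`. script`) rather than executed, which matches the `. hdenv.sh` usage shown above.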

General Usage

- Commands:

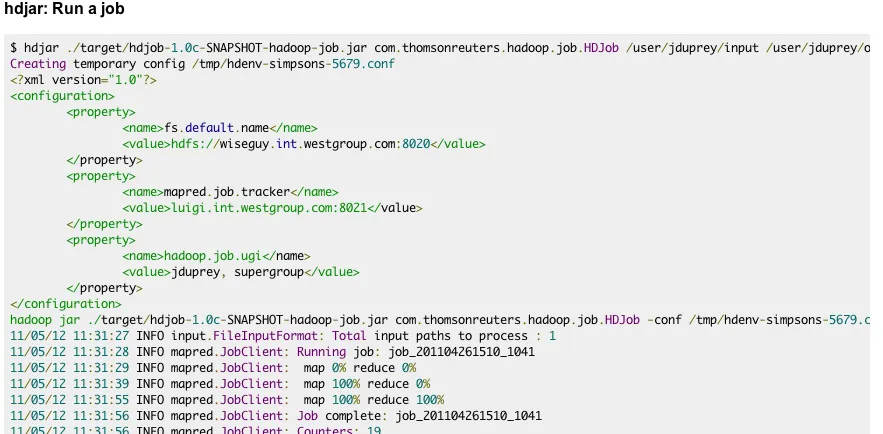

- hdclusters: lists known clusters to pick from

- hdwhich: lists the currently selected cluster

- hdfs: hadoop fs with autocompletion

- hdjar: submit a job jar to the selected cluster

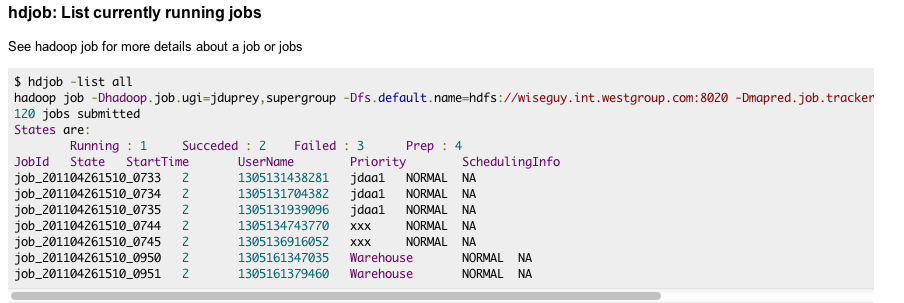

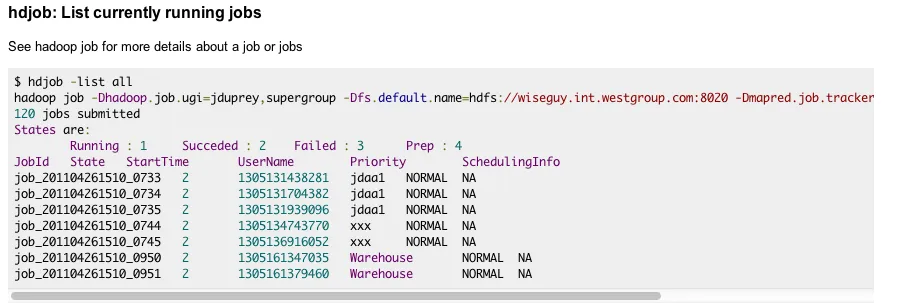

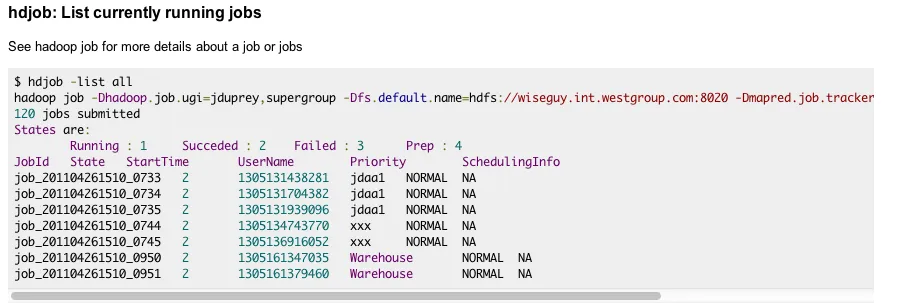

- hdjob: hadoop job

- doJob.sh: submits job jar, collects job conf, reports exit status, etc.

- jobrpt.sh: downloads completed job’s task logs

- hdweb: opens tracker web site for selected cluster

- hdwebf: opens namenode of selected cluster
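One plausible way for wrappers like these to reach a remote cluster is through Hadoop's generic options `-fs` (namenode) and `-jt` (job tracker), which `hadoop fs` and `hadoop job` accept. The sketch below is an assumption about the approach, not the hdenv source: the function names `hdfs_remote` and `hdjob_remote` and the variables `HADOOP_CMD`, `HD_NAMENODE`, and `HD_JOBTRACKER` are hypothetical.

```shell
# Hypothetical sketch, not the actual hdenv wrappers.
# Assumes HD_NAMENODE and HD_JOBTRACKER hold the selected cluster's addresses
# and HADOOP_CMD points at a working hadoop client script.
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# Run "hadoop fs" against the selected cluster's namenode.
hdfs_remote() {
  "$HADOOP_CMD" fs -fs "$HD_NAMENODE" "$@"
}

# Run "hadoop job" against the selected cluster's job tracker.
hdjob_remote() {
  "$HADOOP_CMD" job -jt "$HD_JOBTRACKER" "$@"
}
```

With a cluster selected, `hdfs_remote -ls /user` would list a remote directory and `hdjob_remote -list` would show running jobs, all from the local client install.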

Demo

- Demo some sample usage…

- hdclusters, hdwhich, hdweb*, hdfs, hdjar, doJob.sh, jobrpt.sh,…

Gotchas

- Your jobs will inherit your local settings

- E.g. conf/hdfs-site.xml (dfs.replication)

- adjust your local settings accordingly
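As an alternative to editing conf/hdfs-site.xml, a client-side setting such as dfs.replication can be overridden for a single command with the generic `-D property=value` option. The helper below is a hypothetical illustration (the function name `hd_put_with_replication` and the `HADOOP_CMD` variable are not part of hdenv):

```shell
# Hypothetical helper; HADOOP_CMD is assumed to point at a working hadoop script.
HADOOP_CMD="${HADOOP_CMD:-hadoop}"

# Copy a local file into HDFS with an explicit replication factor,
# overriding whatever dfs.replication the local conf/hdfs-site.xml sets.
hd_put_with_replication() {
  local replication="$1" src="$2" dest="$3"
  "$HADOOP_CMD" fs -D "dfs.replication=$replication" -put "$src" "$dest"
}
```

For example, `hd_put_with_replication 3 data.txt /data/data.txt` would request three replicas even if the local configuration says otherwise.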